Overview

Processing for HSC SSP data is done using the HSC pipeline, a custom version of the LSST Data Management codebase, specialized and enhanced for Hyper Suprime-Cam processing by software teams at NAOJ, IPMU, and Princeton. Most of the pipeline code was written from scratch for LSST and HSC, with significant algorithmic heritage from the SDSS Photo and Pan-STARRS Image Processing Pipelines. The HSC pipeline can also be used to process general-observer HSC data or for custom processing of SSP data; see the documentation as well as this site for open-use observers for basic installation and usage instructions. With more effort, it can also be used to process data from other cameras, though the main LSST codebase may be easier to use for this purpose.

There are four major processing stages:

- Single-Visit Processing

- Internal Calibration

- Image Coaddition

- Coadd Measurement

We also have two optional stages that are not regularly run as part of the official processing:

- Forced Photometry (on individual exposures)

- Image Subtraction (for transient detection)

Single-Visit Processing

For each CCD in an exposure (“visit”), we:

- Perform low-level detrending: apply bias and flat-field corrections, mask and interpolate defects

- Estimate and subtract the background

- Detect and measure bright sources (basic centroiding, aperture photometry, and moments-based shapes)

- Select isolated stars and model the point-spread function, using a modified version of PSFEx

- Find and interpolate cosmic rays (using morphology, not image differences)

- Fit an initial World Coordinate System (WCS) and approximate photometric zero-point by matching to a Pan-STARRS reference catalog

- Detect, deblend, and measure faint sources, computing PSF fluxes in addition to all of the measurements run previously
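As a rough illustration of the detection and measurement steps above, here is a toy version in plain Python. It is a sketch under simplifying assumptions, not the pipeline's implementation: it uses a single global median background (the real background model varies across the CCD), a fixed detection threshold in counts rather than in units of the noise, and unweighted moments over each footprint. All function names are hypothetical.

```python
import statistics
from collections import deque


def subtract_background(image):
    """Subtract one global median background level (a crude stand-in for a
    spatially varying background model); return the new image and the level."""
    level = statistics.median(v for row in image for v in row)
    return [[v - level for v in row] for row in image], level


def detect_footprints(image, threshold):
    """Group 4-connected pixels above `threshold` into footprints (pixel lists)."""
    ny, nx = len(image), len(image[0])
    seen = [[False] * nx for _ in range(ny)]
    footprints = []
    for y0 in range(ny):
        for x0 in range(nx):
            if seen[y0][x0] or image[y0][x0] <= threshold:
                continue
            seen[y0][x0] = True
            pixels, queue = [], deque([(y0, x0)])
            while queue:  # breadth-first flood fill of the above-threshold region
                y, x = queue.popleft()
                pixels.append((y, x))
                for dy, dx in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                    yy, xx = y + dy, x + dx
                    if (0 <= yy < ny and 0 <= xx < nx and not seen[yy][xx]
                            and image[yy][xx] > threshold):
                        seen[yy][xx] = True
                        queue.append((yy, xx))
            footprints.append(pixels)
    return footprints


def measure(image, pixels):
    """Footprint flux, flux-weighted centroid and unweighted second moments."""
    flux = sum(image[y][x] for y, x in pixels)
    xc = sum(x * image[y][x] for y, x in pixels) / flux
    yc = sum(y * image[y][x] for y, x in pixels) / flux
    ixx = sum((x - xc) ** 2 * image[y][x] for y, x in pixels) / flux
    iyy = sum((y - yc) ** 2 * image[y][x] for y, x in pixels) / flux
    ixy = sum((x - xc) * (y - yc) * image[y][x] for y, x in pixels) / flux
    return {"flux": flux, "x": xc, "y": yc, "ixx": ixx, "iyy": iyy, "ixy": ixy}


# A 7x7 image: flat background of 10 counts plus one small source at (3, 3).
img = [[10.0] * 7 for _ in range(7)]
img[3][3] = 30.0
img[3][2] = img[3][4] = img[2][3] = img[4][3] = 20.0
sub, level = subtract_background(img)
sources = [measure(sub, fp) for fp in detect_footprints(sub, threshold=5.0)]
```

The same footprint-and-moments pattern reappears at the coadd stage; only the inputs and the sophistication of each algorithm change.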

All of the above steps are run separately on each CCD in parallel. We then fit an updated (but not yet final) WCS across all CCDs, making use of the fixed positions of the CCDs within the focal plane.
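To illustrate why the fixed CCD positions help, the following toy least-squares fit holds every CCD's focal-plane offset fixed and fits a single affine transformation from focal-plane to tangent-plane coordinates using reference matches from all CCDs at once. The offsets, the affine model, and all names are assumptions made for illustration; the real fit uses the actual camera geometry and a richer distortion model.

```python
def _det3(m):
    """Determinant of a 3x3 matrix."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def _solve3(a, b):
    """Solve the 3x3 system a @ c = b by Cramer's rule."""
    d = _det3(a)
    coeffs = []
    for k in range(3):
        mk = [row[:] for row in a]
        for i in range(3):
            mk[i][k] = b[i]
        coeffs.append(_det3(mk) / d)
    return coeffs


def fit_shared_affine(matches, ccd_offsets):
    """Least-squares fit of one affine map (focal plane -> tangent plane).

    matches: iterable of (ccd_id, x_pix, y_pix, xi_ref, eta_ref)
    ccd_offsets: dict ccd_id -> (dx, dy); held fixed, not fit (hypothetical layout)
    Returns ([a0, au, av], [b0, bu, bv]) with xi = a0 + au*u + av*v, and so on.
    """
    ata = [[0.0] * 3 for _ in range(3)]
    atb_xi, atb_eta = [0.0] * 3, [0.0] * 3
    for ccd, x, y, xi, eta in matches:
        dx, dy = ccd_offsets[ccd]
        row = (1.0, x + dx, y + dy)  # (1, u, v) in focal-plane coordinates
        for i in range(3):
            for j in range(3):
                ata[i][j] += row[i] * row[j]  # accumulate the normal equations
            atb_xi[i] += row[i] * xi
            atb_eta[i] += row[i] * eta
    return _solve3(ata, atb_xi), _solve3(ata, atb_eta)


offsets = {"ccdA": (0.0, 0.0), "ccdB": (100.0, 0.0)}
stars = [("ccdA", 10.0, 10.0), ("ccdA", 20.0, 30.0), ("ccdA", 40.0, 5.0),
         ("ccdB", 10.0, 10.0), ("ccdB", 30.0, 20.0)]
# Synthetic "reference" positions generated from a known transform.
matches = []
for ccd, x, y in stars:
    u, v = x + offsets[ccd][0], y + offsets[ccd][1]
    matches.append((ccd, x, y, 1.0 + 0.5 * u, -2.0 + 0.5 * v))
xi_coeffs, eta_coeffs = fit_shared_affine(matches, offsets)
```

Because the offsets are fixed, stars on every CCD constrain the same three coefficients per axis, so CCDs with few stars still get a well-determined solution.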

Internal Calibration

Using measurements of stars from the previous stage, we solve for a final WCS with higher-order distortion terms and a spatially varying photometric zero-point by requiring that each star have self-consistent fluxes and centroids across all of the exposures in which it appears.
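The self-consistency idea can be illustrated with a toy photometric version of the problem: each observed magnitude is modeled as the star's true magnitude plus a per-exposure zero-point offset, and the two sets of unknowns are solved alternately by least squares. This is a simplified sketch under stated assumptions: the offsets are constant per exposure rather than spatially varying, there is no astrometric part, and the overall zero-point degeneracy is fixed by forcing the offsets to average to zero instead of tying them to the reference catalog.

```python
from collections import defaultdict


def self_calibrate(obs, n_iter=200):
    """Solve m_obs = m_star + delta_exp by alternating least squares.

    obs: list of (star_id, exposure_id, observed_magnitude)
    The model is unchanged by adding a constant to every m_star and subtracting
    it from every delta; here that degeneracy is fixed by forcing mean(delta) = 0.
    """
    delta = {e: 0.0 for _, e, _ in obs}
    m_star = {}
    for _ in range(n_iter):
        # Step 1: best star magnitudes given the current exposure offsets.
        per_star = defaultdict(list)
        for s, e, m in obs:
            per_star[s].append(m - delta[e])
        m_star = {s: sum(v) / len(v) for s, v in per_star.items()}
        # Step 2: best exposure offsets given the current star magnitudes.
        per_exp = defaultdict(list)
        for s, e, m in obs:
            per_exp[e].append(m - m_star[s])
        delta = {e: sum(v) / len(v) for e, v in per_exp.items()}
        # Step 3: remove the degeneracy without changing the fitted model.
        mean = sum(delta.values()) / len(delta)
        delta = {e: d - mean for e, d in delta.items()}
        m_star = {s: m + mean for s, m in m_star.items()}
    return m_star, delta


true_delta = {0: 0.10, 1: -0.05, 2: -0.05}
true_mag = {"a": 18.0, "b": 19.0, "c": 20.0, "d": 21.0}
obs = [(s, e, true_mag[s] + true_delta[e])
       for s in true_mag for e in true_delta
       if not (s == "d" and e == 2)]  # star d was not observed in exposure 2
m_star, delta = self_calibrate(obs)
```

Overlapping observations are what tie the exposures together: a star seen in two exposures directly constrains the difference between their offsets.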

This procedure is carried out in approximately 1.7×1.7 degree “tracts” that tile the sky (with small overlaps). We rely on the reference catalog and observations in the overlap regions to ensure consistent solutions between tracts.

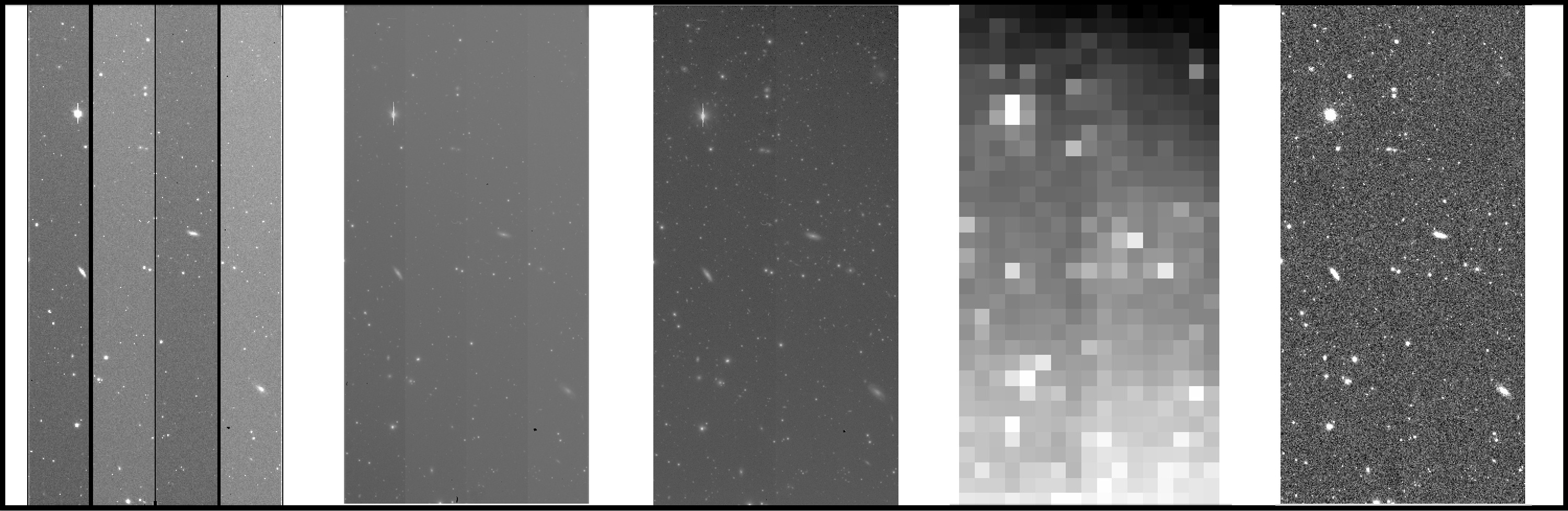

Image Coaddition

All CCD images overlapping a tract are resampled onto a rectangular coordinate system centered on that tract, and then averaged together to build a combined image, called a “coadd”. This is done separately for each band. Instead of matching the point-spread functions of the input images by degrading images with better seeing, we combine the images as they are, and then construct a PSF model for the coadd image by resampling and combining images of the per-exposure PSF models at the position of every source on the coadd. When the exposures are combined using a simple weighted mean or other linear operator, this operation is formally correct, but is not an optimal combination unless all images have the same PSF. Note that nonlinear image combinations such as medians and sigma-clipped means are not valid when the input images have different PSFs.
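The claim that a linear combination of images has a correspondingly combined PSF can be checked with a small 1-D example. This sketch assumes Gaussian PSFs, noise-free images already resampled onto a common grid, and made-up weights; it is not the pipeline's coadd-PSF implementation.

```python
import math


def gaussian_psf(sigma, half_width=8):
    """Normalized 1-D Gaussian PSF sampled on integer pixel offsets."""
    vals = [math.exp(-0.5 * (i / sigma) ** 2)
            for i in range(-half_width, half_width + 1)]
    total = sum(vals)
    return [v / total for v in vals]


def weighted_mean(images, weights):
    """Pixel-by-pixel weighted mean of equally sized 1-D images."""
    wsum = sum(weights)
    return [sum(w * img[i] for img, w in zip(images, weights)) / wsum
            for i in range(len(images[0]))]


psfs = [gaussian_psf(1.2), gaussian_psf(2.5)]  # good and poor seeing
weights = [3.0, 1.0]                            # hypothetical per-exposure weights
flux = 1000.0

# Noise-free images of one point source on a common pixel grid.
images = [[flux * p for p in psf] for psf in psfs]

coadd = weighted_mean(images, weights)
coadd_psf = weighted_mean(psfs, weights)  # same weights applied to the PSF models

# The combination is linear, so the coadded star equals flux * coadd_psf.
max_err = max(abs(c - flux * p) for c, p in zip(coadd, coadd_psf))
```

The correspondence only holds if exactly the same weights are applied to the images and to the PSF models.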

To avoid including artifacts (e.g. satellite trails, cosmic rays) or asteroids in the coadd, we actually produce three different coadds. The first is a simple weighted mean (which does not reject artifacts), and the second is a sigma-clipped mean (which does). By examining the difference between these two coadds and matching the differences to detections on individual exposures, we can identify artifacts directly in the original exposures; the third and final coadd is a simple weighted mean from which those artifacts have been rejected. This procedure detects and rejects artifacts better than simple per-pixel outlier rejection, and it also allows us to mask regions of the coadd in which one or more exposures were rejected.
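A minimal sketch of the three-coadd idea, on a handful of pixels, is shown below. It simplifies the real procedure in one important way: instead of matching coadd differences to detections on individual exposures, it blames each flagged pixel on the single most discrepant exposure. The clipping rule (MAD-based, 3 sigma) and the difference threshold are hypothetical choices.

```python
import statistics


def wmean(values, weights):
    """Weighted mean of a list of values."""
    return sum(v * w for v, w in zip(values, weights)) / sum(weights)


def clipped_wmean(values, weights, nsigma=3.0):
    """Weighted mean after rejecting values more than nsigma robust sigmas
    (1.4826 * MAD) from the median."""
    med = statistics.median(values)
    sigma = 1.4826 * statistics.median(abs(v - med) for v in values) or 1e-12
    kept = [(v, w) for v, w in zip(values, weights) if abs(v - med) <= nsigma * sigma]
    return wmean([v for v, _ in kept], [w for _, w in kept])


def reject_artifacts(exposures, weights, diff_threshold):
    """Flag pixels where the direct and clipped coadds disagree, blame the most
    discrepant exposure, and rebuild a direct weighted mean without it.
    Returns (final_coadd, masks); masks[k][i] is True if exposure k was
    rejected at pixel i."""
    npix = len(exposures[0])
    masks = [[False] * npix for _ in exposures]
    for i in range(npix):
        column = [exp[i] for exp in exposures]
        direct = wmean(column, weights)
        clipped = clipped_wmean(column, weights)
        if abs(direct - clipped) > diff_threshold:
            worst = max(range(len(column)), key=lambda k: abs(column[k] - clipped))
            masks[worst][i] = True
    final = []
    for i in range(npix):
        good = [(exp[i], w) for exp, w, m in zip(exposures, weights, masks) if not m[i]]
        final.append(wmean([v for v, _ in good], [w for _, w in good]))
    return final, masks


exposures = [
    [10.0, 10.2, 9.9, 10.1, 10.0],
    [10.1, 9.9, 10.0, 10.0, 9.8],
    [9.9, 10.0, 10.1, 9.9, 10.2],
    [10.0, 10.1, 250.0, 10.0, 10.0],  # a "satellite trail" hits pixel 2
]
final, masks = reject_artifacts(exposures, [1.0, 1.0, 1.0, 1.0], diff_threshold=1.0)
```

The clipped mean is used only to locate artifacts; the final coadd is still a linear weighted mean, so the coadd PSF construction remains valid.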

Coadd Measurement

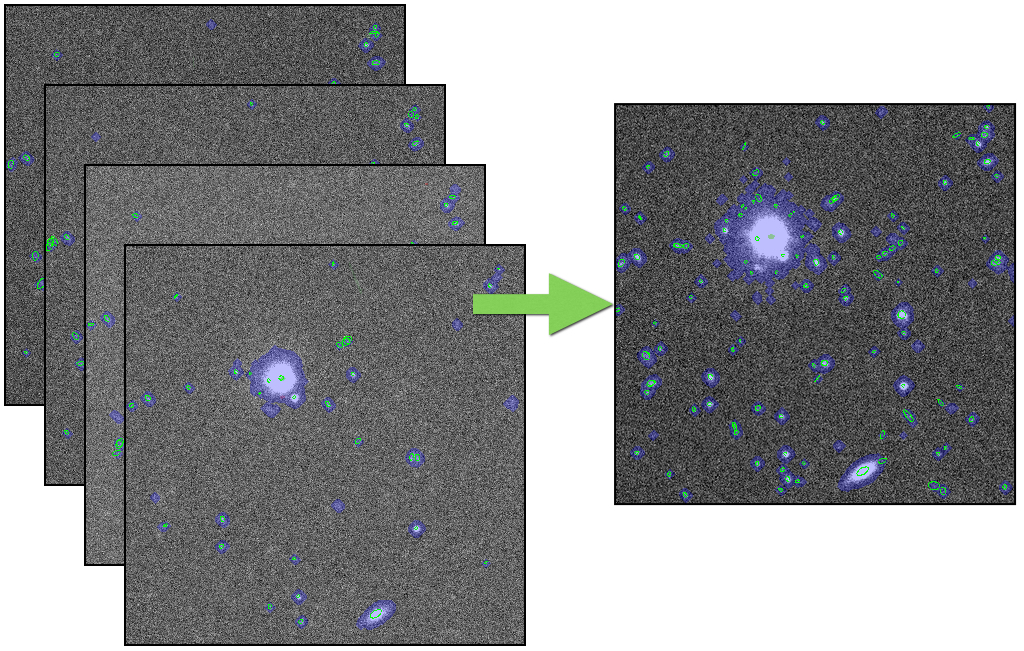

We detect sources on each per-band coadd separately, but before deblending and measuring these sources we first merge them across bands. Above-threshold regions (called “footprints”) from different bands that overlap are combined; peaks within these regions that are sufficiently close together are merged into a single peak. This yields footprints and peaks that are consistent across all bands. We consider each peak to represent a source, and treat all peaks within the same footprint as blended.
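The cross-band merge can be sketched as two small operations: a union of overlapping footprints and a distance-based merge of peaks. This is an illustrative sketch only; footprints are plain sets of pixels, the merge radius is a hypothetical parameter, and the rule that the first peak seen keeps its position is an assumption, not necessarily the pipeline's choice.

```python
import math


def merge_footprints(footprints_by_band):
    """Union footprints (sets of (x, y) pixels) that overlap in any band."""
    merged = []
    for band_footprints in footprints_by_band.values():
        for fp in band_footprints:
            combined = set(fp)
            for other in [m for m in merged if m & combined]:
                merged.remove(other)
                combined |= other
            merged.append(combined)
    return merged


def merge_peaks(peaks_by_band, radius):
    """Merge peaks (x, y) lying within `radius` pixels of an existing merged
    peak; the first peak seen keeps its position (an assumption)."""
    merged = []
    for band, peaks in peaks_by_band.items():
        for x, y in peaks:
            for m in merged:
                if math.hypot(x - m["x"], y - m["y"]) <= radius:
                    m["bands"].add(band)
                    break
            else:
                merged.append({"x": x, "y": y, "bands": {band}})
    return merged


def peaks_in(footprint, peaks):
    """Peaks whose nearest pixel lies inside the footprint."""
    return [p for p in peaks if (round(p["x"]), round(p["y"])) in footprint]


fps = merge_footprints({"i": [{(0, 0), (1, 0)}],
                        "r": [{(1, 0), (2, 0)}, {(8, 8)}]})
peaks = merge_peaks({"i": [(0.0, 0.0), (2.0, 0.0)], "r": [(0.4, 0.0)]}, radius=1.0)
blends = [fp for fp in fps if len(peaks_in(fp, peaks)) > 1]
```

Here the i- and r-band footprints join into one three-pixel footprint containing two merged peaks, so those two sources are treated as a blend in every band.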

We then deblend the peaks within the footprints separately in each band. This assigns a fraction of the flux in each pixel to every source in the blend, allowing them to be measured separately. We again run a suite of centroid, aperture flux, and shape measurement algorithms on these deblended pixel values, as well as PSF and galaxy model fluxes and shear estimation codes for weak gravitational lensing.
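The flux-apportioning step can be illustrated with a 1-D blend of two sources. The sketch assumes each source already has a template (here, idealized templates equal to the true profiles); how the deblender actually builds its templates from the data is not shown, and the function names are hypothetical.

```python
import math


def apportion(image, templates):
    """Split each pixel's value among sources in proportion to their templates."""
    children = [[0.0] * len(image) for _ in templates]
    for i, value in enumerate(image):
        total = sum(t[i] for t in templates)
        if total <= 0.0:
            continue  # no template claims this pixel; leave it unassigned
        for child, t in zip(children, templates):
            child[i] = value * t[i] / total
    return children


def profile(center, sigma, amplitude, n):
    """1-D Gaussian profile sampled on pixels 0..n-1."""
    return [amplitude * math.exp(-0.5 * ((i - center) / sigma) ** 2) for i in range(n)]


n = 20
a = profile(7.0, 1.5, 100.0, n)   # brighter source
b = profile(11.0, 1.5, 50.0, n)   # fainter neighbour
blend = [x + y for x, y in zip(a, b)]
# Idealized case: the templates equal the true profiles.
children = apportion(blend, [a, b])
```

The children always sum to the parent image wherever some template is positive; template quality only affects how the flux is divided between them.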

After measuring the coadd for each band independently, we select a “reference band” for each source. For most sources, this is the i band; for sources not detected in i (or detected only at low signal-to-noise), we fall back to r, then z, y, and g, in that order. We then perform “forced” measurement on the coadds in all bands, in which positions and shapes are held fixed at their values from the reference band and only amplitudes (i.e. fluxes) are allowed to vary. This consistent photometry across bands produces our best estimates of colors.
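Both steps can be sketched briefly. The priority order below comes from the text; the S/N threshold of 10 and the choice to return `None` when no band qualifies are hypothetical. With position and shape fixed, forced measurement reduces to a linear least-squares fit for a single amplitude, which has the closed form used in `forced_flux`.

```python
REFERENCE_PRIORITY = ["i", "r", "z", "y", "g"]


def choose_reference_band(snr_by_band, min_snr=10.0):
    """Return the first band in priority order with S/N >= min_snr, or None.
    The threshold value is a hypothetical stand-in for 'low signal-to-noise'."""
    for band in REFERENCE_PRIORITY:
        if snr_by_band.get(band, 0.0) >= min_snr:
            return band
    return None


def forced_flux(data, model, variance):
    """Amplitude A minimizing sum((data - A * model)**2 / variance), with the
    model's position and shape held fixed."""
    num = sum(d * m / v for d, m, v in zip(data, model, variance))
    den = sum(m * m / v for m, v in zip(model, variance))
    return num / den


band = choose_reference_band({"i": 3.0, "r": 25.0, "g": 40.0})
# Unit-flux model profile from the reference band, applied to g-band pixels.
model = [0.1, 0.2, 0.4, 0.2, 0.1]
g_pixels = [25.0, 50.0, 100.0, 50.0, 25.0]
g_flux = forced_flux(g_pixels, model, [1.0] * 5)
```

Because the same model profile is used in every band, flux ratios between bands (and hence colors) are not affected by band-to-band differences in the fitted position or shape.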

Forced Photometry on Exposures

While forced photometry on coadds is appropriate for colors, forced photometry on individual exposures can provide high signal-to-noise photometry for static objects as well as light curves for variable sources. Code to run forced photometry on individual exposures is available in the pipeline, but it is not always run as part of the SSP processing, partly because it is computationally expensive and partly because we do not have an algorithm for deblending in exposure-level forced photometry, so these measurements are strongly affected by neighbors.

Image Subtraction

Transient detection is best carried out by comparing pairs of images after matching their PSFs. The HSC codebase includes code to do this (essentially unchanged from the LSST pipeline), but it is not yet automated to the same extent as the rest of the pipeline and has received much less testing.
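The principle of PSF-matched subtraction can be shown in the simplest possible case: 1-D Gaussian PSFs, where Gaussian widths add in quadrature under convolution, so convolving the better-seeing template with a Gaussian of width sqrt(sigma_sci^2 - sigma_tmpl^2) matches it to the science image. This is an idealized sketch, not the pipeline's algorithm: real PSFs are neither Gaussian nor exactly known, so in practice the matching kernel must be fit to the data.

```python
import math


def gaussian_kernel(sigma, half_width):
    """Normalized 1-D Gaussian kernel on integer offsets -half_width..half_width."""
    vals = [math.exp(-0.5 * (i / sigma) ** 2) for i in range(-half_width, half_width + 1)]
    total = sum(vals)
    return [v / total for v in vals]


def convolve(image, kernel):
    """Direct 1-D convolution; pixels beyond the image edges count as zero."""
    h = len(kernel) // 2
    out = []
    for i in range(len(image)):
        acc = 0.0
        for j, k in enumerate(kernel):
            src = i - (j - h)
            if 0 <= src < len(image):
                acc += k * image[src]
        out.append(acc)
    return out


def star(n, x0, sigma, flux):
    """Sampled Gaussian point source with (approximately) total flux `flux`."""
    norm = flux / (sigma * math.sqrt(2.0 * math.pi))
    return [norm * math.exp(-0.5 * ((i - x0) / sigma) ** 2) for i in range(n)]


n = 60
sigma_template, sigma_science = 1.0, 2.0
template = star(n, 20.0, sigma_template, 1000.0)  # good seeing, static star only
science = [s + t for s, t in zip(star(n, 20.0, sigma_science, 1000.0),
                                  star(n, 40.0, sigma_science, 300.0))]  # + transient

# Degrade the template PSF to the science PSF, then subtract.
kernel = gaussian_kernel(math.sqrt(sigma_science**2 - sigma_template**2), half_width=10)
difference = [s - m for s, m in zip(science, convolve(template, kernel))]
```

In the difference image the static star cancels to numerical precision while the transient at pixel 40 remains; subtracting without the convolution step would instead leave a strong residual at the static star's position.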